Deploying Kubernetes v1.23.5 on CentOS 7 with containerd


# Deploying Kubernetes v1.23.5 with containerd

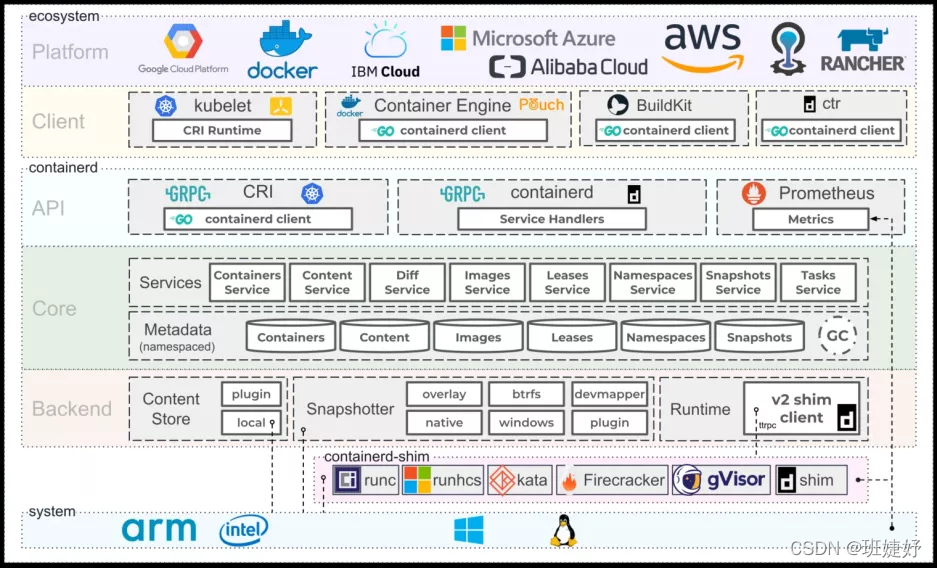

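# Introduction to containerd

containerd is an industry-standard container runtime that emphasizes simplicity, robustness, and portability. It originated in Docker and provides the following features:

- Manages the container lifecycle (from creating a container to destroying it)
- Pulls and pushes container images
- Manages storage (image and container data)
- Calls runc to run containers (interacting with runc and similar low-level runtimes)
- Manages container network interfaces and networks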

# containerd architecture diagram

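# Cluster requirements

- One or more servers running CentOS 7.9
- All machines in the cluster must be able to reach each other
- The firewall must be disabled
- Internet access is required to pull images
- Swap must be disabled
- IPVS and iptables forwarding must be configured
- The cluster is built with kubeadm: apiserver, etcd, controller-manager, scheduler, kubelet (managed as a systemd service; all other components run as containers), and kube-proxy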

| IP address | Hostname | Operating system | Container runtime |
|---|---|---|---|
| 10.11.121.111 | k8s-master | CentOS-7.9 | containerd |
| 10.11.121.112 | k8s-node1 | CentOS-7.9 | containerd |
| 10.11.121.113 | k8s-node2 | CentOS-7.9 | containerd |

# Setting up Kubernetes (environment preparation)

Environment preparation:

Perform the following steps on every node.

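# 1. Check the current host kernel

The nodes run the stock CentOS 7.9 kernel. Upgrading to 5.17+ would mean updating the kernel on all three nodes, so it is left unchanged for now.

Set the hostname on each node (for example with hostnamectl set-hostname k8s-master) and confirm it with hostnamectl.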

[root@k8s-master ~]# uname -r

3.10.0-1160.el7.x86_64

[root@k8s-master ~]# hostnamectl

Static hostname: k8s-master

Icon name: computer-vm

Chassis: vm

Machine ID: 6a4d2edbefd14658af278cd43366c8c2

Boot ID: a25edbef987a42bb8e5757ab42278501

Virtualization: vmware

Operating System: CentOS Linux 7 (Core)

CPE OS Name: cpe:/o:centos:centos:7

Kernel: Linux 3.10.0-1160.el7.x86_64

Architecture: x86-64

# 2. Configure hosts

Add hostname mappings for the three nodes. This also makes it easier to point the nodes at an NTP time server later.

[root@k8s-master ~]# vim /etc/hosts

10.11.121.111 k8s-master

10.11.121.112 k8s-node1

10.11.121.113 k8s-node2
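Editing /etc/hosts by hand on three machines is easy to get slightly wrong, so here is a minimal sketch of an idempotent helper. The function name add_host_entry and its optional file argument are my own illustrative choices, not part of the original procedure.

```shell
#!/usr/bin/env bash
# Append an "IP hostname" mapping to a hosts file unless the hostname is already mapped.
# Usage: add_host_entry <ip> <hostname> [hosts_file]
# (add_host_entry is a hypothetical helper name used for illustration.)
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Look for the hostname as a whole field (column 2 onward), ignoring comment lines.
    if awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (run as root on every node):
#   add_host_entry 10.11.121.111 k8s-master
#   add_host_entry 10.11.121.112 k8s-node1
#   add_host_entry 10.11.121.113 k8s-node2
```

Running the helper twice with the same hostname leaves the file unchanged, so it is safe to include in a node bootstrap script.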

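# 3. Configure the yum repository

The Alibaba Cloud CentOS 7 yum mirror is used here.

First remove all local repo files, then download the new repo file, and finally run yum makecache fast to rebuild the metadata cache.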

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

or

wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

# 4. Disable the firewall

Neither the firewall nor SELinux is needed here, so disable both permanently and then reboot the system.

[root@k8s-master ~]# systemctl stop firewalld && systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@k8s-master ~]# setenforce 0

[root@k8s-master ~]# sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

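# 5. Disable swap

Turn off the system swap partition (Kubernetes requires swap to be disabled).

swapoff -a disables swap until the next reboot. To disable it permanently, comment out the swap entry in /etc/fstab.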

[root@k8s-master ~]# swapoff -a

[root@k8s-master ~]# vi /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

#Confirm that swap is disabled (only the header line with no entries means it is off)

[root@k8s-master ~]# cat /proc/swaps

Filename Type Size Used Priority
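The fstab edit above can also be scripted. The following is a minimal sketch, assuming GNU sed; the function name disable_swap_in_fstab and the optional file argument are illustrative and not part of the original steps.

```shell
#!/usr/bin/env bash
# Comment out every active swap entry in an fstab-style file, keeping a .bak copy.
# Usage: disable_swap_in_fstab [fstab_file]
# (disable_swap_in_fstab is a hypothetical helper name used for illustration.)
disable_swap_in_fstab() {
    local file="${1:-/etc/fstab}"
    # Lines that are not already comments and contain "swap" as a
    # whitespace-delimited field get a leading '#'.
    sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$file"
}

# Example (run as root, after swapoff -a):
#   disable_swap_in_fstab
```

Because lines that are already commented are skipped, running it again does not stack extra '#' characters.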

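# 6. Load the required kernel modules

These modules are needed for Kubernetes v1.20 and later: overlay backs containerd's overlayfs snapshotter, and br_netfilter makes bridged traffic visible to iptables.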

[root@k8s-node1 ~]# cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

[root@k8s-node1 ~]# modprobe overlay

[root@k8s-node1 ~]# modprobe br_netfilter
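To confirm the modules actually loaded, something like the sketch below can help. It reads a /proc/modules-style listing; the function names module_loaded and missing_modules are hypothetical. A feature compiled directly into the kernel does not appear in /proc/modules, so treat a "missing" result as a hint rather than proof.

```shell
#!/usr/bin/env bash
# Report whether a kernel module appears in a /proc/modules-style listing.
# Usage: module_loaded <name> [modules_file]
# (Both helper names in this block are hypothetical, chosen for illustration.)
module_loaded() {
    local name="$1" file="${2:-/proc/modules}"
    # The module name is the first whitespace-delimited field of each line.
    awk -v m="$name" '$1 == m { found = 1 } END { exit !found }' "$file"
}

# Print the modules from the argument list that are not loaded.
# Returns non-zero if at least one is missing.
# Usage: missing_modules <modules_file> <name>...
missing_modules() {
    local file="$1"; shift
    local m rc=0
    for m in "$@"; do
        if ! module_loaded "$m" "$file"; then
            echo "$m"
            rc=1
        fi
    done
    return "$rc"
}

# Example:
#   missing_modules /proc/modules overlay br_netfilter
```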

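# 7. Configure time synchronization

Synchronize the clock against the Alibaba Cloud NTP server with ntpdate. This is not covered in detail here.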

[root@k8s-master ~]# yum install -y ntpdate

[root@k8s-master ~]# ntpdate ntp.aliyun.com

18 Apr 09:48:20 ntpdate[1745]: step time server 203.107.6.88 offset 1.334239 sec

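# 8. Use IPVS mode

Load the IPVS modules (optional; the default is iptables mode).

kube-proxy supports two proxy modes, iptables and IPVS. To use IPVS mode, load the IPVS modules and install the ipset tool on all nodes before initializing the cluster. On Linux kernel 4.19 and later, use nf_conntrack in place of nf_conntrack_ipv4.

The ipset and ipvsadm packages also need to be installed.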

[root@k8s-master ~]# cat /etc/modules-load.d/ipvs.modules

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

[root@k8s-master ~]# chmod 755 /etc/modules-load.d/ipvs.modules

[root@k8s-master ~]# bash /etc/modules-load.d/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

nf_conntrack_ipv4 15053 0

nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 139264 2 ip_vs,nf_conntrack_ipv4

libcrc32c 12644 3 xfs,ip_vs,nf_conntrack

[root@k8s-master ~]# yum install -y ipset ipvsadm
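The conntrack module name depends on the kernel version, as noted above. Here is a minimal sketch that picks the name from a kernel release string. The function name conntrack_module_for is hypothetical, and distribution kernels with backported changes may not follow the plain 4.19 cut-off, so check with modinfo if unsure. Separately, as far as I know systemd only auto-loads files named *.conf that list bare module names from /etc/modules-load.d, so the ipvs.modules script shown above most likely has to be rerun after a reboot.

```shell
#!/usr/bin/env bash
# Print the conntrack module name that matches a kernel release string.
# Usage: conntrack_module_for [kernel_release]   (defaults to the output of uname -r)
# (conntrack_module_for is a hypothetical helper name used for illustration.)
conntrack_module_for() {
    local release="${1:-$(uname -r)}"
    local major="${release%%.*}"      # text before the first dot
    local rest="${release#*.}"        # text after the first dot
    local minor="${rest%%[!0-9]*}"    # leading digits of the remainder
    # From 4.19 on, nf_conntrack_ipv4 is merged into nf_conntrack.
    if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
        echo nf_conntrack
    else
        echo nf_conntrack_ipv4
    fi
}

# Example:
#   modprobe -- "$(conntrack_module_for)"
```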

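# 9. Configure iptables forwarding

Set the sysctl parameters that allow iptables to inspect bridged traffic. Because they are written to /etc/sysctl.d, they persist across reboots.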

[root@k8s-master ~]# vim /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

[root@k8s-master ~]# sysctl --system
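A quick way to double-check the file before relying on it is to parse it for the expected keys. This is only a sketch; the function name sysctl_conf_has is hypothetical, and it checks the file contents, not the live kernel values (use sysctl <key> for those).

```shell
#!/usr/bin/env bash
# Check that a sysctl.d-style file sets a key to an expected value.
# Usage: sysctl_conf_has <file> <key> <value>
# (sysctl_conf_has is a hypothetical helper name used for illustration.)
sysctl_conf_has() {
    local file="$1" key="$2" want="$3"
    awk -F= -v k="$key" -v w="$want" '
        /^[[:space:]]*[#;]/ { next }    # skip comment lines
        {
            gsub(/[[:space:]]/, "", $1)  # strip whitespace around the key
            gsub(/[[:space:]]/, "", $2)  # strip whitespace around the value
            if ($1 == k && $2 == w) found = 1
        }
        END { exit !found }' "$file"
}

# Example:
#   for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward; do
#       sysctl_conf_has /etc/sysctl.d/k8s.conf "$key" 1 || echo "missing: $key"
#   done
```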

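# 10. Configure the Kubernetes repository

Configure the Kubernetes package repository on all nodes (using the Tsinghua University mirror).

The Alibaba Cloud and Huawei Kubernetes repositories currently have frequent problems, so the Tsinghua mirror is used instead.

Configure the Kubernetes yum repository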

[root@k8s-master ~]# vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.tuna.tsinghua.edu.cn/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

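Install the Kubernetes packages

If no version is given, yum installs the latest one. A specific version can also be pinned.

[root@k8s-master ~]# yum install -y kubeadm kubelet kubectl

#Or install a specific version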

[root@k8s-master ~]# yum install -y kubelet-1.23.1 kubeadm-1.23.1 kubectl-1.23.1

[root@k8s-master ~]# systemctl enable kubelet && systemctl start kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

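# Configure the containerd environment

Configure kubelet to use containerd as its container runtime and set cgroupDriver to systemd (there are two ways to do this).

# Method 1

Shown here on the master node

Configure kubelet to use containerd. Every node needs the cgroup-driver=systemd setting; otherwise worker nodes cannot pull images and create Pods automatically.

[root@k8s-master ~]# cat > /etc/sysconfig/kubelet <<EOF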

KUBELET_EXTRA_ARGS=--container-runtime=remote --container-runtime-endpoint=unix:///run/containerd/containerd.sock --cgroup-driver=systemd

EOF

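# Method 2

Configure on the master node

If you would rather not modify /etc/sysconfig/kubelet, kubeadm init must be given a YAML file that passes the cgroupDriver setting. The default init configuration can be exported with the following command: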

[root@k8s-master ~]# kubeadm config print init-defaults > kubeadm-config.yaml

Then adjust the configuration to your needs:

- Set advertiseAddress to the master node's IP address
- Set criSocket to /run/containerd/containerd.sock
- Set taints to add a taint to the master node
- Set imageRepository to registry.aliyuncs.com/google_containers (the Alibaba Cloud registry)
- Set the kube-proxy mode to ipvs
- Add podSubnet with the value 10.244.0.0/16
- Because containerd is the runtime, set cgroupDriver to systemd when initializing the node

[root@k8s-master ~]# cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  # Set the master node's IP address
  advertiseAddress: 10.11.121.111
  bindPort: 6443
nodeRegistration:
  # Point the CRI socket at containerd
  criSocket: /run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: k8s-master
  # Add the master taint
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master

---

apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
# Use the Alibaba Cloud image repository
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.23.0
networking:
  dnsDomain: cluster.local
  # Add the Pod subnet
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}

---

# Set the kube-proxy mode to IPVS
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs

---

# Set the kubelet cgroup driver to systemd, matching containerd
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd

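# Deploy containerd

containerd:

Deploy it on all nodes.

# 1. Install containerd

Configure the containerd package repository on all nodes (the containerd package ships in the docker-ce repository).

yum search shows which containerd versions are available.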

[root@k8s-master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@k8s-master ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# List the available containerd.io versions

[root@k8s-master ~]# yum search containerd.io --showduplicates

[root@k8s-master ~]# yum -y install containerd.io

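# 2. Modify the configuration file

- Set the pause image address

- Change the cgroup driver to systemd

- Add the runsc container runtime

- Configure a Docker registry mirror

The configuration file that ships with containerd is essentially empty, so generate a complete one first.

The containerd configuration directory is /etc/containerd.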

[root@k8s-master ~]# cd /etc/containerd/

[root@k8s-master containerd]# containerd config default > config.toml

[root@k8s-master containerd]# vim config.toml

[plugins."io.containerd.grpc.v1.cri"]

····

# Use a mirror reachable from mainland China for the pause image

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.2"

····

# Add a runsc runtime of type io.containerd.runsc.v1, at the same level as runc

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runsc]

runtime_type = "io.containerd.runsc.v1"

# Set the systemd cgroup driver to true

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

···

SystemdCgroup = true

# Add a mirror for the docker.io registry (Alibaba Cloud image accelerator)

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

endpoint = ["https://b9pmyelo.mirror.aliyuncs.com"]

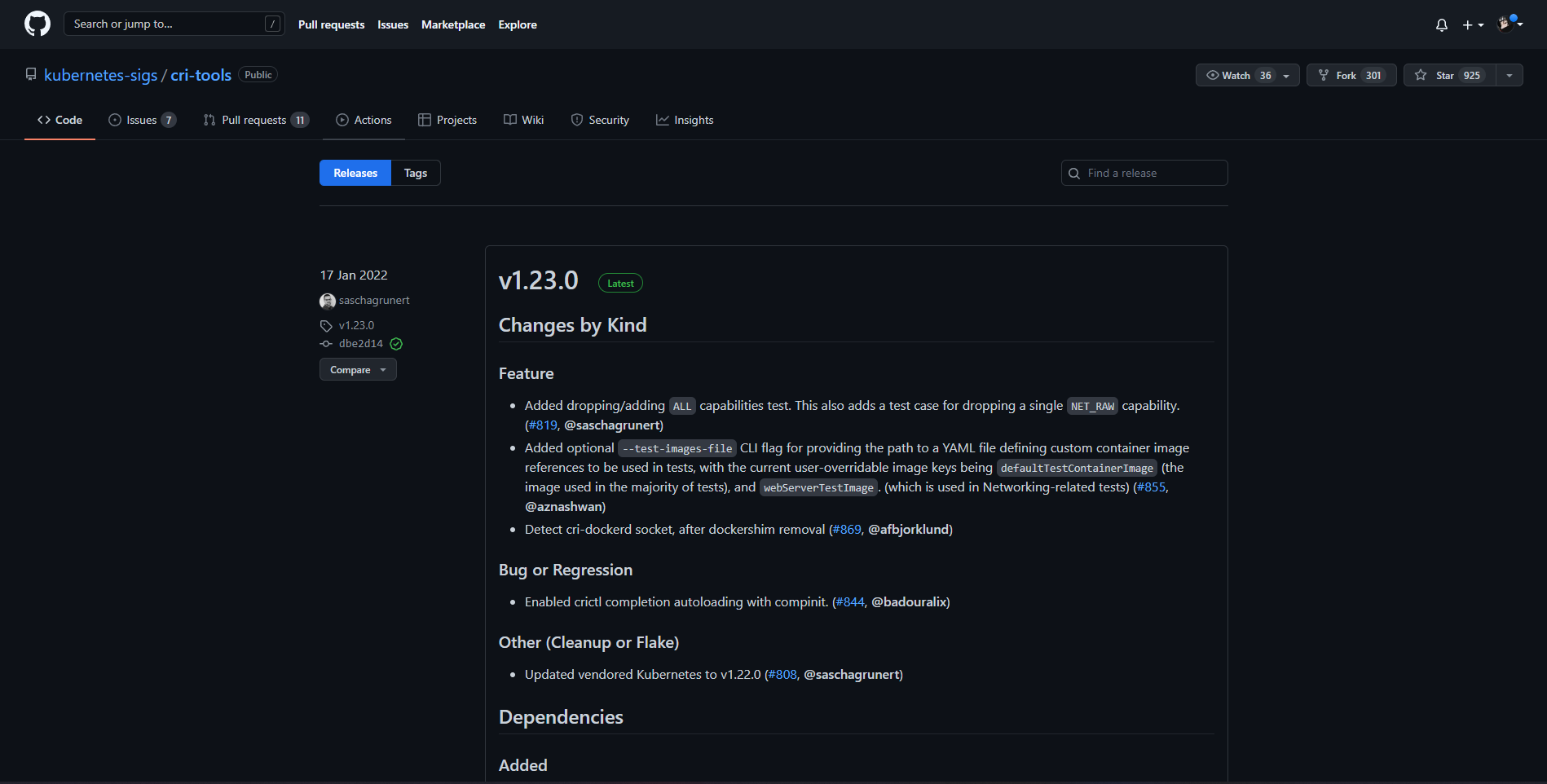
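Two of the edits above (the pause image and SystemdCgroup) are single-line changes, so they can be applied with sed instead of vim. This is a minimal sketch, assuming the generated config.toml already contains a sandbox_image line and a SystemdCgroup = false line, which I believe is the case for the containerd 1.5 defaults. The function name patch_containerd_config is hypothetical. The runsc and registry mirror sections add new tables and are not covered here.

```shell
#!/usr/bin/env bash
# Patch the pause image and the systemd cgroup setting in a containerd config.toml,
# keeping a .bak copy of the original.
# Usage: patch_containerd_config [config_file] [pause_image]
# (patch_containerd_config is a hypothetical helper name used for illustration.)
patch_containerd_config() {
    local file="${1:-/etc/containerd/config.toml}"
    local pause="${2:-registry.aliyuncs.com/google_containers/pause:3.2}"
    sed -i.bak -E \
        -e "s|^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*).*|\1\"${pause}\"|" \
        -e 's|^([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*)false|\1true|' \
        "$file"
}

# Example (run as root on every node, then restart containerd):
#   patch_containerd_config
#   systemctl restart containerd
```

The original indentation of each line is preserved, which keeps a later diff against the .bak copy easy to read.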

# 3. The CRI client tool crictl

Download page: https://github.com/kubernetes-sigs/cri-tools/releases/

[root@k8s-master ~]# wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.23.0/crictl-v1.23.0-linux-amd64.tar.gz

[root@k8s-master ~]# tar zxvf crictl-v1.23.0-linux-amd64.tar.gz -C /usr/local/bin/

[root@k8s-master ~]# crictl --version

crictl version v1.23.0

Add the configuration file that points crictl at containerd

[root@k8s-master ~]# cat /etc/crictl.yaml

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

[root@k8s-master ~]# systemctl daemon-reload

[root@k8s-master ~]# systemctl enable containerd && systemctl restart containerd

Alternatively, set the parameter with the following command

[root@k8s-master ~]# crictl config runtime-endpoint unix:///run/containerd/containerd.sock
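Since the same crictl settings are needed on every node, the file can be generated rather than typed. Here is a minimal sketch; the function name write_crictl_config and its optional arguments are illustrative assumptions, while the four keys are the ones shown in /etc/crictl.yaml above.

```shell
#!/usr/bin/env bash
# Write a crictl configuration that targets the containerd socket.
# Usage: write_crictl_config [config_file] [socket_path]
# (write_crictl_config is a hypothetical helper name used for illustration.)
write_crictl_config() {
    local file="${1:-/etc/crictl.yaml}"
    local sock="${2:-/run/containerd/containerd.sock}"
    # The socket path is absolute, so "unix://" plus the path yields "unix:///...".
    cat > "$file" <<EOF
runtime-endpoint: unix://${sock}
image-endpoint: unix://${sock}
timeout: 10
debug: false
EOF
}

# Example (run as root on every node):
#   write_crictl_config
```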

Pull an image to verify that it works

[root@k8s-master ~]# crictl pull nginx

Image is up to date for sha256:605c77e624ddb75e6110f997c58876baa13f8754486b461117934b24a9dc3a85

[root@k8s-master ~]# crictl images

IMAGE TAG IMAGE ID SIZE

docker.io/library/nginx latest 605c77e624ddb 56.7MB

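# Start the cluster on the master node

# 1-1 Deploy with the kubeadm command

- --apiserver-advertise-address: the address the cluster advertises
- --image-repository: the default registry k8s.gcr.io is not reachable from mainland China, so the Alibaba Cloud registry is used here
- --kubernetes-version: the Kubernetes version
- --service-cidr: the cluster-internal virtual network (Service IPs), the unified entry point for reaching Pods
- --pod-network-cidr: the Pod network; it must match the value in the CNI component YAML deployed below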

[root@k8s-master ~]# kubeadm init \

--apiserver-advertise-address=10.11.121.111 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.23.1 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16 \

--ignore-preflight-errors=all

# 1-2 Start the cluster from the configuration file

Here I initialize the Kubernetes cluster from the configuration file.

[root@k8s-master ~]# kubeadm init --config=kubeadm-config.yaml

···

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

#Non-root user

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

#root user

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.11.121.111:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:637d4427138d3ca87f32bf57e3a16e85cc806cbbf2dc25b475cf0bec9504a95f

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

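# 2. Deploy the container network (CNI)

Calico is a pure layer-3 data center networking solution that supports a wide range of platforms, including Kubernetes and OpenStack.

On each compute node, Calico uses the Linux kernel to implement an efficient virtual router (vRouter) that handles data forwarding, and each vRouter uses BGP to advertise the routes of the workloads running on it to the rest of the Calico network.

In addition, the Calico project implements Kubernetes network policy, providing ACL functionality.

Install the Pod network plugin

Reason: coredns stays in Pending until a Pod network plugin is installed, so one has to be applied manually. The plugin chosen here is Calico.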

[root@k8s-master ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6d8c4cb4d-44wlk 0/1 Pending 0 16s

kube-system coredns-6d8c4cb4d-dvjms 0/1 Pending 0 16s

kube-system etcd-k8s-master 1/1 Running 0 36s

kube-system kube-apiserver-k8s-master 1/1 Running 0 28s

kube-system kube-controller-manager-k8s-master 1/1 Running 0 27s

kube-system kube-proxy-qtmsd 1/1 Running 0 17s

kube-system kube-scheduler-k8s-master 1/1 Running 0 33s

Deploy the Calico network component (default mode)

[root@k8s-master ~]# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/calico-kube-controllers created

Check the Pod status again:

[root@k8s-master ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-7c845d499-qr7cw 1/1 Running 0 99m

kube-system calico-node-288zk 1/1 Running 0 99m

kube-system calico-node-7dhnc 1/1 Running 0 97m

kube-system calico-node-z78p4 1/1 Running 0 97m

kube-system coredns-6d8c4cb4d-44wlk 1/1 Running 0 100m

kube-system coredns-6d8c4cb4d-dvjms 1/1 Running 0 100m

kube-system etcd-k8s-master 1/1 Running 0 100m

kube-system kube-apiserver-k8s-master 1/1 Running 0 100m

kube-system kube-controller-manager-k8s-master 1/1 Running 0 100m

kube-system kube-proxy-qtmsd 1/1 Running 0 100m

kube-system kube-proxy-whjpj 1/1 Running 0 97m

kube-system kube-proxy-xnjs9 1/1 Running 0 97m

kube-system kube-scheduler-k8s-master 1/1 Running 0 100m

[root@k8s-master ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

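# 3. Join the cluster

After the master has initialized the cluster, copy the kubeadm join command from its output and run it on each worker node.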

[root@k8s-node1 ~]# kubeadm join 10.11.121.111:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:637d4427138d3ca87f32bf57e3a16e85cc806cbbf2dc25b475cf0bec9504a95f

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
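The bootstrap token in the configuration above has a ttl of 24h0m0s, so a join attempted later needs a fresh token; running kubeadm token create --print-join-command on the master prints a new join command. When assembling the command by hand, a small format check catches copy-paste mistakes early. This is a minimal sketch; the function name join_command is hypothetical, and it only validates the shape of the values, not whether the cluster accepts them.

```shell
#!/usr/bin/env bash
# Print a kubeadm join command after sanity-checking the token and CA hash formats.
# Usage: join_command <endpoint> <token> <ca_cert_hash>
# (join_command is a hypothetical helper name used for illustration.)
join_command() {
    local endpoint="$1" token="$2" hash="$3"
    # Bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}.
    if ! printf '%s' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
        echo "invalid token format" >&2
        return 1
    fi
    # The discovery hash is "sha256:" followed by 64 lowercase hex digits.
    if ! printf '%s' "$hash" | grep -Eq '^sha256:[0-9a-f]{64}$'; then
        echo "invalid CA cert hash format" >&2
        return 1
    fi
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash %s\n' \
        "$endpoint" "$token" "$hash"
}

# Example:
#   join_command 10.11.121.111:6443 abcdef.0123456789abcdef \
#       sha256:637d4427138d3ca87f32bf57e3a16e85cc806cbbf2dc25b475cf0bec9504a95f
```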

# 4. Verify the cluster

- Check that the container runtime is containerd

- Check that the cluster is using IPVS mode

- Check the IPVS forwarding state

[root@k8s-master ~]# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master Ready control-plane,master 120m v1.23.5 10.11.121.111 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.5.11

k8s-node1 Ready <none> 117m v1.23.5 10.11.121.112 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.5.11

k8s-node2 Ready <none> 117m v1.23.5 10.11.121.113 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.5.11

[root@k8s-master ~]# kubectl -n kube-system get cm kube-proxy -o yaml | grep mode

mode: ipvs

[root@k8s-master ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 10.11.121.111:6443 Masq 1 7 0

TCP 10.96.0.10:53 rr

-> 10.244.235.194:53 Masq 1 0 0

-> 10.244.235.195:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.244.235.194:9153 Masq 1 0 0

-> 10.244.235.195:9153 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.244.235.194:53 Masq 1 0 0

-> 10.244.235.195:53 Masq 1 0 0
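The runtime check can be automated by filtering the kubectl get node -o wide output. This is a minimal sketch that assumes CONTAINER-RUNTIME is the last column, as in the output above; the function name nodes_not_on_containerd is hypothetical.

```shell
#!/usr/bin/env bash
# Read "kubectl get node -o wide" output on stdin and print the names of nodes
# whose last column (CONTAINER-RUNTIME) does not start with "containerd://".
# Returns non-zero if any such node is found.
# (nodes_not_on_containerd is a hypothetical helper name used for illustration.)
nodes_not_on_containerd() {
    # NR > 1 skips the header row; NF > 0 skips blank lines.
    awk 'NR > 1 && NF > 0 && $NF !~ /^containerd:\/\// { print $1; bad = 1 }
         END { exit bad }'
}

# Example:
#   kubectl get node -o wide | nodes_not_on_containerd && echo "all nodes use containerd"
```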